Designing AI Chips: Key Considerations for Building Intelligent Hardware

I’ve been writing a lot about AI lately, and for good reason. AI dominates much of the public discussion, both inside and outside the tech world, so it’s natural to address it. Many electronic design automation (EDA) vendors, Agnisys among them, are building AI into their solutions. We’re also seeing more and more customers designing innovative chips that provide hardware support for AI algorithms. Let’s talk about what this means for your development teams.

Power Is Key

Companies are designing their own dedicated chips to increase the speed at which AI applications can tackle the problems thrown at them, as well as their capacity for ever-larger problems. It is entirely possible to run AI algorithms on any standard processor, and for relatively simple applications this may suffice. For harder problems, however, software alone can’t provide answers in real time, so moving some algorithms into hardware becomes necessary.

If that sounds familiar, it should. Early computers had single processors that handled everything. When the demand for computing power grew faster than new generations of silicon could evolve, designers started moving specific functionality to adjunct processors. The ubiquitous term “central processing unit” (CPU) only makes sense if there are other processing units in use. I/O controllers and direct memory access (DMA) units are two very common examples.

Perhaps the best comparison to today’s situation with AI is what happened with computer graphics. As screens moved from monochromatic fixed-width text terminals to bit-mapped full-color displays, CPUs needed a lot of help. This led to graphics processing units (GPUs) with 3D rendering and other advanced capabilities. Today many GPUs are much larger than the CPUs in the same system. AI processing chips seem to be following a similar path.

Flexibility Is Key

Many AI applications are based on machine learning (ML), which is a different approach to computing than traditional fixed algorithms. Rather than following hand-written rules, ML builds up its “learnings” from data over time, so that each decision is informed by the examples and decisions that came before. In principle, this is similar to the way humans gain experience and use it to make better decisions. We can argue about whether computers can actually “learn” anything, but it seems like a reasonable term to describe how ML works.

The really interesting thing about ML is that AI can act in ways its developers had not anticipated. This unpredictability leads some to fear that AI will take over the world and subjugate or eliminate us humans. But the same adaptability is also what produces unexpected beneficial results. Many hope that AI will be able to find cures for terrible diseases, make fusion energy practical, or solve other problems that might take humans generations to solve, if ever.

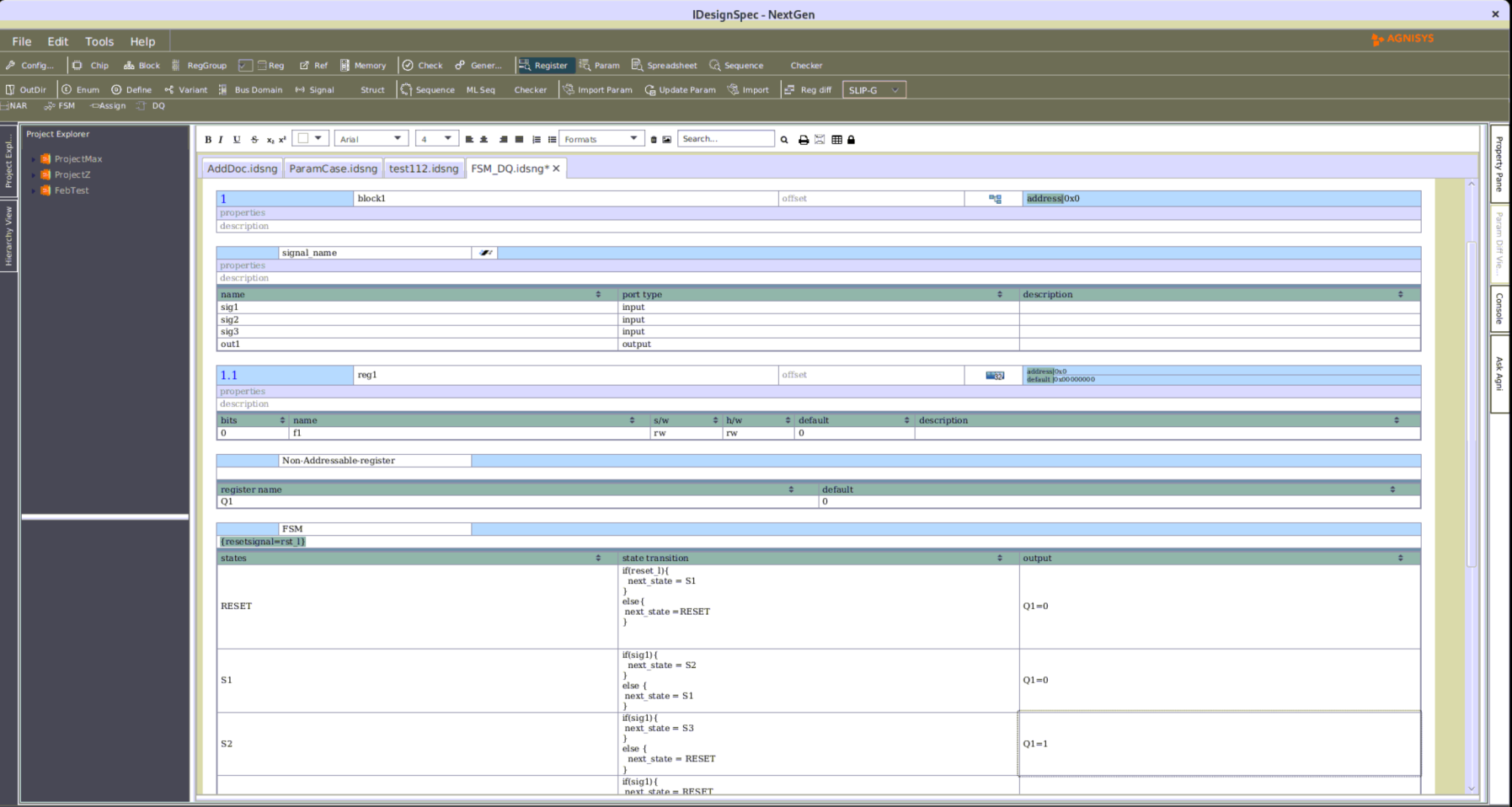

To produce optimal results, ML and AI models have many parameters that can be tuned. The choices of weights, layer structure, mathematical operations, and activation functions vary too much from model to model to be hard-wired into fixed hardware. AI chips must be flexible enough that all these parameters can be set for the problem being solved, and even changed on the fly. A rich set of configuration and status registers (CSRs) provides the required flexibility and dynamic adaptability.
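To make the CSR idea concrete, here is a minimal sketch of how software might reconfigure an AI block by updating fields in a single 32-bit control register. The register and its fields (`enable`, `act_sel`, `layer_count`, `precision`), their bit positions, and their encodings are hypothetical, invented for illustration; a real chip’s register map comes from its own specification.

```python
# Hypothetical 32-bit control register for an AI accelerator block.
# Field names, bit offsets, widths, and encodings are illustrative assumptions.
FIELDS = {
    # name:        (bit offset, width)
    "enable":      (0, 1),   # 1 = run the compute engine
    "act_sel":     (1, 3),   # activation select, e.g. 0=ReLU, 1=sigmoid, 2=tanh
    "layer_count": (4, 8),   # number of layers to execute (0-255)
    "precision":   (12, 2),  # numeric format, e.g. 0=INT8, 1=FP16, 2=BF16
}

def set_field(reg: int, name: str, value: int) -> int:
    """Return a new register value with one field updated (read-modify-write)."""
    offset, width = FIELDS[name]
    mask = (1 << width) - 1
    if not 0 <= value <= mask:
        raise ValueError(f"{name}={value} does not fit in {width} bits")
    reg &= ~(mask << offset)   # clear the field's old bits
    reg |= value << offset     # insert the new value
    return reg & 0xFFFFFFFF    # keep the result within 32 bits

def get_field(reg: int, name: str) -> int:
    """Extract one field from a register value."""
    offset, width = FIELDS[name]
    return (reg >> offset) & ((1 << width) - 1)

# Configure a 12-layer run with tanh activation, then start it.
ctrl = 0
ctrl = set_field(ctrl, "layer_count", 12)
ctrl = set_field(ctrl, "act_sel", 2)
ctrl = set_field(ctrl, "enable", 1)
print(hex(ctrl))  # 0xc5

# Switch the activation function on the fly without touching other fields.
ctrl = set_field(ctrl, "act_sel", 0)
print(hex(ctrl))  # 0xc1
```

The read-modify-write pattern is what lets one field change while the rest of the configuration stays intact, which is the kind of dynamic adjustment described above.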

Capacity Is Key

Since so many AI applications must respond in real time, performance is critical. I mentioned earlier that one way to make processors faster is to offload functionality to other processors, and that is exactly what your dedicated AI chips do. This is a form of parallel processing; adding cores or employing distributed processing are other ways to increase performance. Software AI solutions, for example, run faster on modern CPUs with dozens of cores.

Hardware-based AI can take advantage of massive parallelism, and many AI chips provide hundreds or thousands of processing elements. Again, this sounds a lot like graphics processing, and in fact GPUs turn out to be good platforms for some types of ML/AI-based applications. Some operations in AI algorithms, most notably multiply-accumulate (MAC) operations, occur so frequently, and so often independently of one another, that they map well onto a massively parallel processor design.
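A matrix-vector product, the core of many neural-network layers, shows why MAC operations parallelize so well: each output element is a chain of MACs over one row of weights and does not depend on any other row. The minimal sketch below assigns each row to a worker from a thread pool, which stands in for hardware processing elements purely as an illustration. In CPython the threads will not give a real speedup; the point is the independent decomposition, not performance.

```python
from concurrent.futures import ThreadPoolExecutor

def mac_row(row, x):
    """Dot product of one weight row with the input, as a chain of MACs."""
    acc = 0
    for w, xi in zip(row, x):
        acc += w * xi  # one multiply-accumulate step
    return acc

def matvec_parallel(weights, x, workers=4):
    """Compute weights @ x, handing each independent row to a separate worker.

    The workers model processing elements; pool.map returns results in row order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: mac_row(row, x), weights))

W = [[1, 2, 3],
     [4, 5, 6]]
print(matvec_parallel(W, [1, 1, 1]))  # [6, 15]
```

On an AI chip the same split is done in silicon: many MAC units work on different rows (or tiles) at the same time, and only the final results need to be gathered.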

ML performance also depends on fast access to stored data, such as model weights and inputs, and the memory must have the capacity to hold a huge amount of information. Massive amounts of high-bandwidth memory are essential. AI chips must also provide fast I/O channels to move data on and off chip quickly enough to meet real-time requirements. This includes gathering data from sensors that capture the real world, such as those monitoring the traffic surrounding an autonomous vehicle.
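Some back-of-the-envelope arithmetic shows why memory and I/O bandwidth matter so much. The sketch below assumes, as a simplification, that every weight is read from external memory once per inference with no on-chip reuse, and that sensor data arrives as raw, uncompressed frames. The model size, frame rate, and camera resolution are hypothetical example figures, not measurements of any particular chip.

```python
def weight_bandwidth(num_params, bytes_per_param, inferences_per_sec):
    """Bytes/s needed if every weight is fetched once per inference.

    Assumes no caching or reuse on chip, so this is a pessimistic upper bound.
    """
    return num_params * bytes_per_param * inferences_per_sec

def sensor_bandwidth(width, height, bytes_per_pixel, frames_per_sec):
    """Bytes/s of raw, uncompressed image data from one camera."""
    return width * height * bytes_per_pixel * frames_per_sec

# Hypothetical: 1 billion FP16 (2-byte) weights, 10 inferences per second.
mem = weight_bandwidth(1_000_000_000, 2, 10)
print(mem / 1e9, "GB/s")    # 20.0 GB/s

# Hypothetical: one 1920x1080 RGB camera (3 bytes/pixel) at 30 frames/s.
cam = sensor_bandwidth(1920, 1080, 3, 30)
print(cam / 1e6, "MB/s")    # 186.624 MB/s
```

Multiply the sensor figure by the number of cameras and other sensors on a vehicle, and the need for fast I/O channels alongside high-bandwidth memory becomes clear.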

Agnisys Is Key

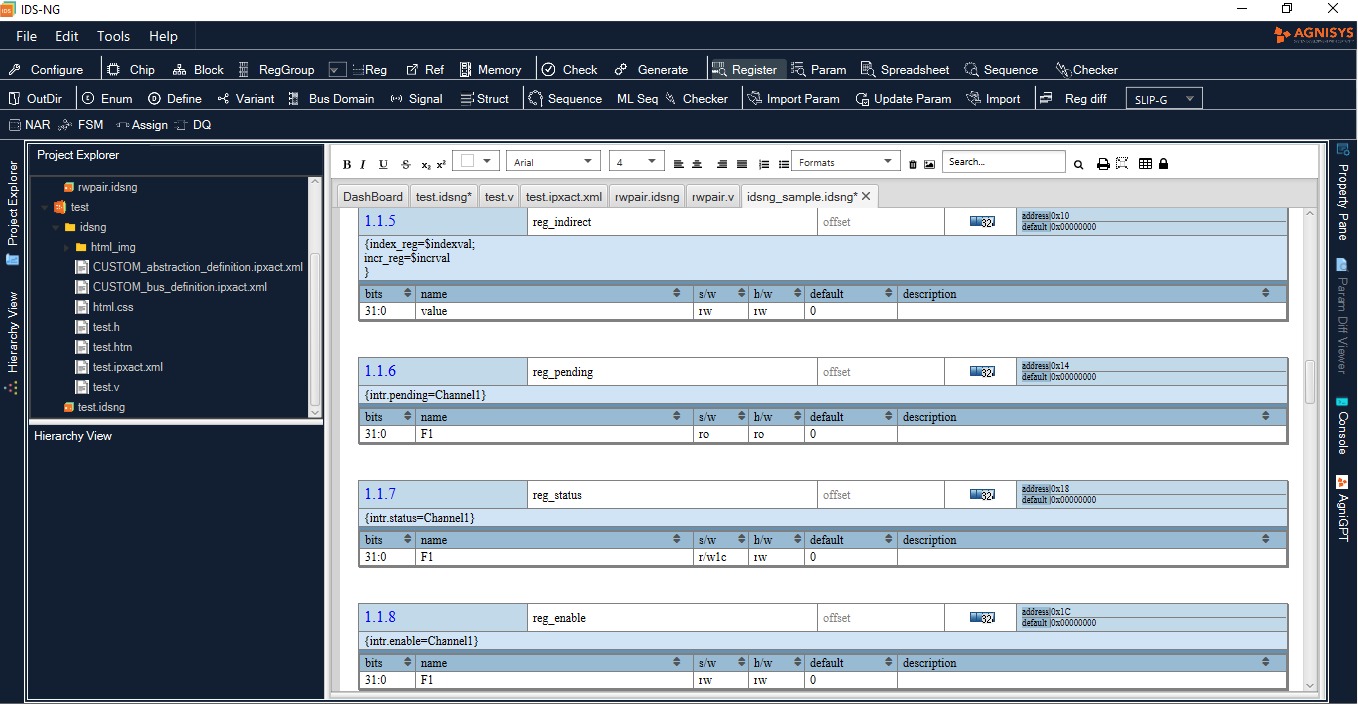

Our specification automation solutions can do a lot to help you design your AI chip. Our IDesignSpec™ (IDS) Suite is the industry’s most established and trusted way to automatically generate design, verification, software, validation, and documentation files for your CSRs from a wide range of industry-standard specification formats. We also generate any required bus interfaces, clock-domain-crossing (CDC) logic, and functional safety mechanisms.
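The underlying idea of specification automation is that one machine-readable description of the registers drives every generated file, so hardware and software can never disagree about an address. The toy sketch below shows only that general principle: it turns a small register specification into C `#define` lines. The specification format, the block name `NPU_CTRL`, and the base address are all invented for illustration; this is not the IDS input format or its actual output.

```python
# Toy register specification (hypothetical format, NOT the IDS input format).
SPEC = {
    "block": "NPU_CTRL",
    "base": 0x4000_0000,
    "registers": [
        {"name": "CONTROL",   "offset": 0x00},
        {"name": "STATUS",    "offset": 0x04},
        {"name": "LAYER_CFG", "offset": 0x08},
    ],
}

def gen_c_header(spec):
    """Emit one C #define per register giving its absolute address."""
    lines = [f"/* Generated from spec for {spec['block']}; do not edit */"]
    for reg in spec["registers"]:
        addr = spec["base"] + reg["offset"]
        lines.append(f"#define {spec['block']}_{reg['name']}_ADDR 0x{addr:08X}u")
    return "\n".join(lines)

print(gen_c_header(SPEC))
```

In a real flow, the same specification would also feed generators for RTL, verification models, and documentation, which is where most of the time savings come from.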

AI chips typically contain a rich mix of IP for standard functions and custom blocks with proprietary logic. IDS-IPGen™ helps you develop your custom blocks, including finite state machines (FSMs), from executable specifications. For the standard blocks, our Silicon IP Portfolio generates designs of interfaces for popular industry buses as well as functions such as AES, DMA, GPIO, I2C, I2S, PIC, PWM, SPI, Timer, and UART.
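One way a finite state machine specification can be “executable” is to write it as a transition table that can be simulated directly. The sketch below models a hypothetical control FSM for an accelerator block that loads data, computes, and stores results. The states, events, and the table format are assumptions made for illustration and do not reflect IDS-IPGen syntax.

```python
# Hypothetical accelerator control FSM as a transition table.
# (current state, event) -> next state; states and events are illustrative.
TRANSITIONS = {
    ("IDLE",    "start"):      "LOAD",
    ("LOAD",    "load_done"):  "COMPUTE",
    ("COMPUTE", "mac_done"):   "STORE",
    ("STORE",   "store_done"): "IDLE",
}

def step(state, event):
    """Return the next state; unlisted (state, event) pairs leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)

def run(events, state="IDLE"):
    """Simulate the FSM over a sequence of events and return the state trace."""
    trace = [state]
    for ev in events:
        state = step(state, ev)
        trace.append(state)
    return trace

print(run(["start", "load_done", "mac_done", "store_done"]))
# ['IDLE', 'LOAD', 'COMPUTE', 'STORE', 'IDLE']
```

Because the table is plain data, the same description can be simulated early to check behavior and, in a generation flow, translated into RTL.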

IDS-Integrate™ automatically generates the complete top-level design of your AI chip, connecting all your standard and custom blocks. We also generate any bus multiplexors, aggregators, and bridges required between the blocks. This pushbutton process is exactly what you need as you explore different top-level architectural choices for your AI chip. To try a new configuration, just update your connection specification and run IDS-Integrate again.

Learning More

I have only been able to touch on a few key points regarding building AI chips in this post. As I noted at the beginning, I’ve talked about this topic quite a bit. If you want to read more, I’ve collected links to all my previous AI-related blog posts:

If you’re not designing AI into your chips yet, you will be soon. When that time comes, or if it’s already here, you can trust us to help you succeed. We look forward to working with you.