Using IDesignSpec to Help Build an AI Chip

If I’ve been blogging a lot about artificial intelligence (AI) recently, there are several good reasons for this. Of course, AI is one of the hottest topics both in engineering journals and in mainstream publications. AI is improving many industries, including our domain of electronic design automation (EDA). Further, in an interesting twist, EDA tools enhanced by AI are being used to design, verify, and validate the huge, complex chips used for AI applications.

The Etched Example

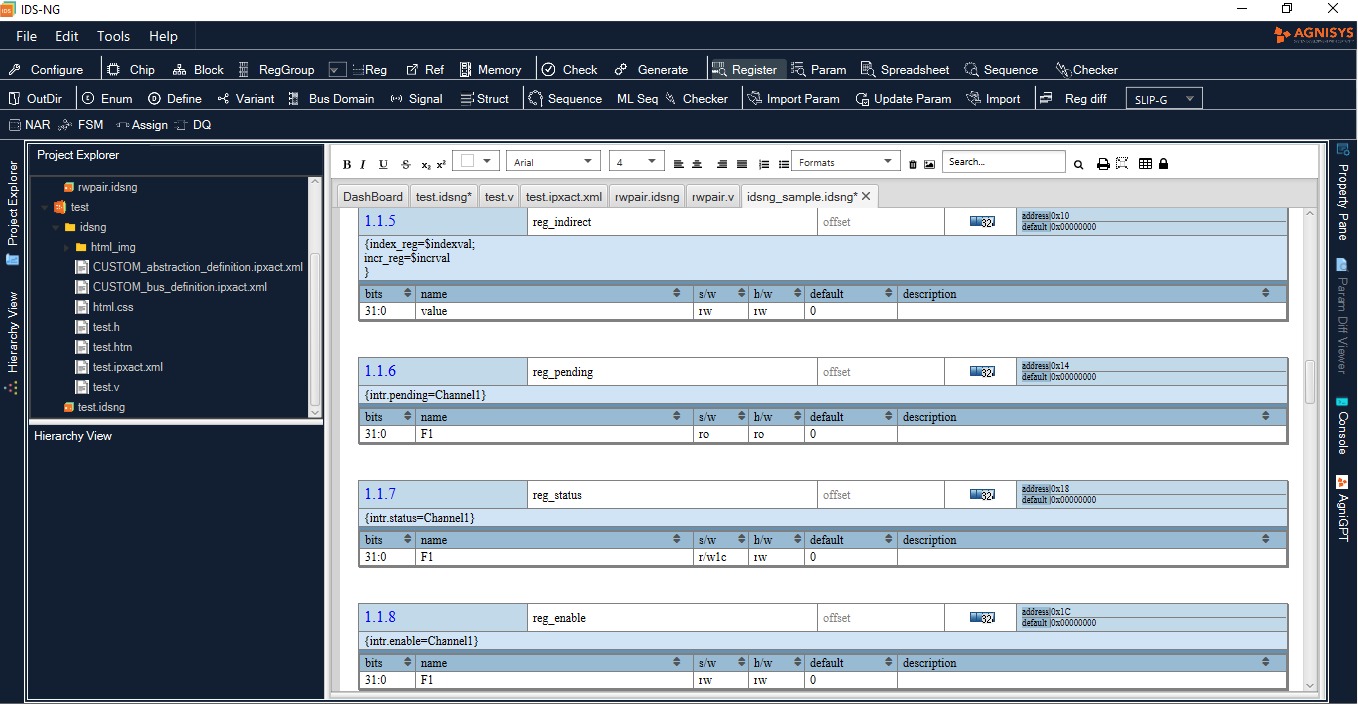

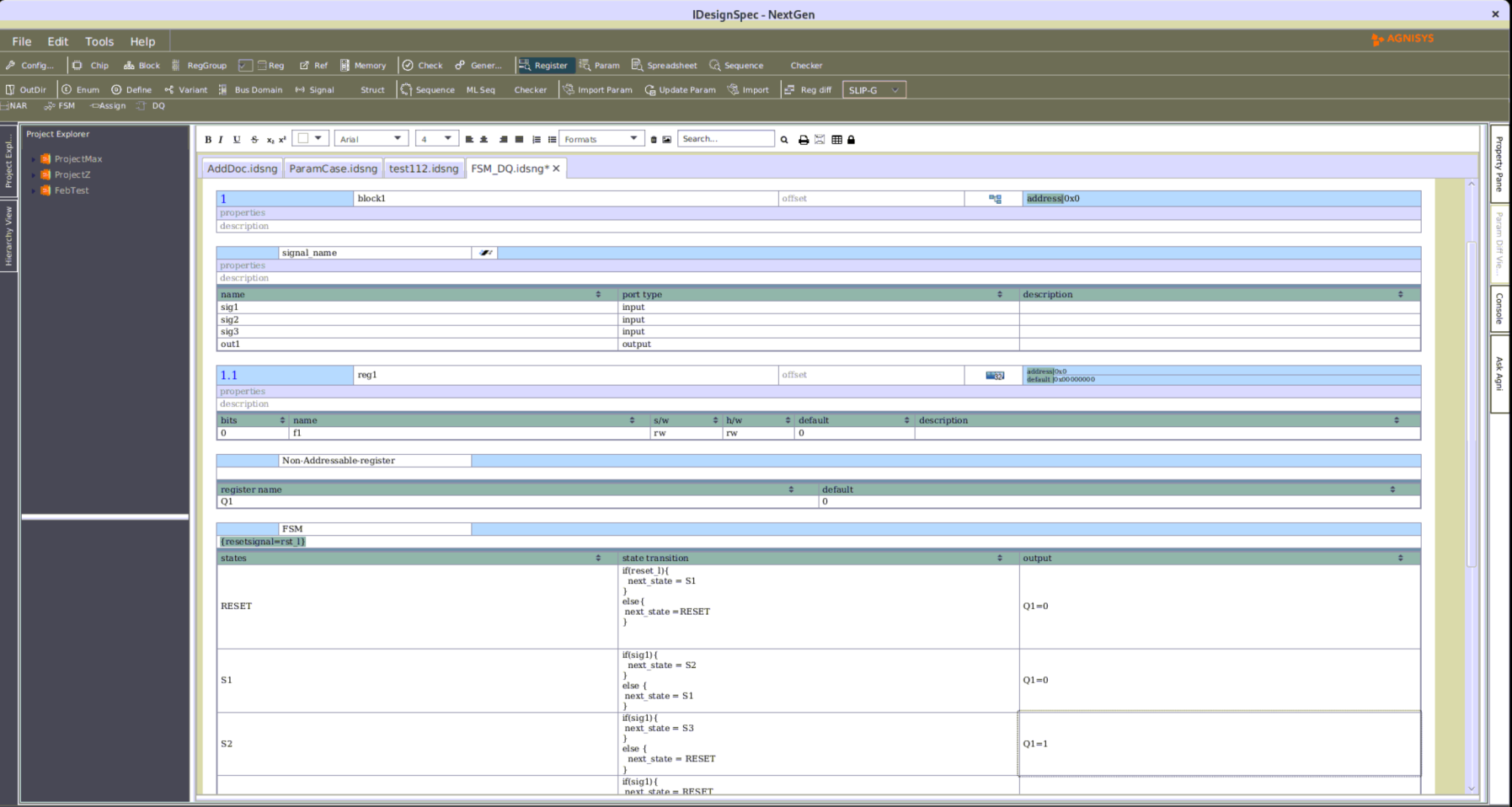

Agnisys has numerous customers designing AI chips, but of course we can’t identify them without their permission. We’re delighted that Etched, a cutting-edge player in AI-specific hardware, has publicly announced that they’re using our IDesignSpec™ Suite to help develop their novel transformer chips. They are using both our tools and our associated intellectual property (IP) designs to enhance their development process with specification automation, our area of expertise.

Etched Director of TPM Joon Kim says that our “powerful” solution “automates our specification-driven workflow, allowing us to work more efficiently and eliminate risks associated with manual processes.” That’s exactly why Agnisys exists. By automating the generation of files needed to design, verify, validate, and document complex chips, we allow our users to focus on adding value to their own products rather than spending time on repetitive manual tasks.
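To make the idea of specification automation concrete, here is a minimal sketch of the general pattern: one specification, several generated outputs. It is not the IDesignSpec input format or API. The `SPEC` dictionary, its field names, the `DMA` block, and both generator functions are hypothetical and exist only for illustration.

```python
# Hypothetical register specification. The layout and field names are
# illustrative assumptions, not an IDesignSpec format.
SPEC = {
    "block": "DMA",
    "registers": [
        {"name": "CTRL", "offset": 0x00, "desc": "Control register"},
        {"name": "STATUS", "offset": 0x04, "desc": "Status register"},
    ],
}


def c_header(spec):
    """Generate C offset macros for firmware from the specification."""
    return "\n".join(
        f"#define {spec['block']}_{reg['name']}_OFFSET 0x{reg['offset']:02X}u"
        for reg in spec["registers"]
    )


def doc_table(spec):
    """Generate a documentation table from the same specification."""
    rows = ["| Register | Offset | Description |", "| --- | --- | --- |"]
    rows += [
        f"| {reg['name']} | 0x{reg['offset']:02X} | {reg['desc']} |"
        for reg in spec["registers"]
    ]
    return "\n".join(rows)


if __name__ == "__main__":
    print(c_header(SPEC))
    print(doc_table(SPEC))
```

Because both outputs come from the same source, a change to an offset or a name shows up in the header and the documentation together, which is the kind of manual synchronization risk that automation removes.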

Kim also says, “we evaluated the option of using free/open-source and other commercial solutions but chose Agnisys for its comprehensive functionality and world-class 24/7 365-day support, which is paramount when rapid go-to-market is the aim.” I think this also hits the nail on the head. There are other specification automation tools available, especially for registers, but no one else even comes close to the comprehensive solution that we provide.

What is a Transformer?

In an AI transformer, text is converted into tokens, and each token is contextualized with the other tokens through an attention mechanism so that key tokens are amplified and minor tokens are reduced. Word order is taken into account so that, for example, “cat chases mouse” and “mouse chases cat” are not modeled the same. The transformer contains several main components:

- Tokenizers, which convert text into tokens

- The embedding layer, which converts tokens and their positions into vectors

- Transformer layers, which perform repeated transformations on the vectors to extract linguistic information

- The un-embedding layer, which converts the final vectors to a probability distribution over the tokens
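The four components above can be sketched as a toy pipeline in plain Python. This is a rough illustration under heavy assumptions, not a real model and not Etched’s design: the three-word vocabulary, the vector width, and the random weights are made up, and the layer omits the learned query/key/value projections, feed-forward blocks, and normalization that real transformers use. The un-embedding step reuses the token embedding table, which is one common choice.

```python
import math
import random

VOCAB = ["cat", "chases", "mouse"]  # toy vocabulary (assumption)
DIM = 4                             # toy vector width (assumption)
MAX_LEN = 8                         # longest sequence this sketch supports

rng = random.Random(0)              # fixed seed so runs are repeatable


def rand_matrix(rows, cols):
    """Random weights standing in for trained parameters."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


TOKEN_EMB = rand_matrix(len(VOCAB), DIM)
POS_EMB = rand_matrix(MAX_LEN, DIM)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def tokenize(text):
    """Tokenizer: map whitespace-separated words to vocabulary ids."""
    return [VOCAB.index(word) for word in text.lower().split()]


def embed(ids):
    """Embedding layer: add each token vector to its position vector."""
    return [
        [t + p for t, p in zip(TOKEN_EMB[tok], POS_EMB[pos])]
        for pos, tok in enumerate(ids)
    ]


def attention_layer(vectors):
    """Simplified self-attention: each vector becomes itself plus a
    weighted mix of all vectors, weighted by scaled dot-product scores."""
    out = []
    for q in vectors:
        weights = softmax([dot(q, k) / math.sqrt(DIM) for k in vectors])
        mixed = [
            sum(w * v[d] for w, v in zip(weights, vectors))
            for d in range(DIM)
        ]
        out.append([a + b for a, b in zip(q, mixed)])
    return out


def unembed(vector):
    """Un-embedding layer: probability distribution over the vocabulary."""
    return softmax([dot(vector, emb) for emb in TOKEN_EMB])


def next_token_distribution(text, layers=2):
    vectors = embed(tokenize(text))
    for _ in range(layers):
        vectors = attention_layer(vectors)
    return unembed(vectors[-1])


if __name__ == "__main__":
    for sentence in ("cat chases mouse", "mouse chases cat"):
        print(sentence, next_token_distribution(sentence))
```

Because the position vector is added to each token vector, “cat” in first position and “cat” in last position enter the layers as different vectors, which is how word order is taken into account in this sketch.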

Mapping these components directly into hardware can deliver very high performance and scalability. Unlike earlier types of AI models such as recurrent neural networks (RNNs), transformers have no recurrent units, so they can process the tokens of a sequence in parallel and require less training time. This is important since training large language models (LLMs) on massive language datasets is a sizable portion of the development cost. Modern transformers use a combination of pretraining and fine-tuning.

Transformers have proven useful in a surprisingly wide range of AI applications. These include natural language processing (NLP), language translation, computer vision, audio processing, robotics, and many other areas of machine learning (ML) and deep learning (DL). Many of today’s leading AI solutions are based on this technology. In fact, the “T” in ChatGPT stands for “transformer,” since it uses OpenAI’s generative pre-trained transformer (GPT) models.

A Game-Changer AI Chip

Etched describes their Sohu chip as “the world’s first transformer ASIC” and presents it as a faster alternative to graphics processing units (GPUs) for many AI applications. The key to the Etched approach is that they burn the transformer architecture directly into silicon. This enables the models to run with much higher throughput and to handle next-generation models with a trillion parameters or more.

I encourage you to read a fascinating blog post from Etched that discusses their approach and why they chose it. They are clear that their chip is made for transformers and that it can’t run many other types of AI models. The advantage of this specialization is that a great deal of control logic and programmability can be eliminated, allowing many more math blocks to be included. This seems to be a key reason why their performance is so impressive.

Etched says that focusing on transformers also makes software development easier. AI chip developers no longer have to hire teams of kernel experts to wring every last bit of performance out of hardware designed for multiple types of models. Etched makes the bold statement that “our software, from drivers to kernels to the serving stack, will be open source.” They are taking a truly daring and innovative approach, and we’re excited to be helping them on their journey.

Conclusion

Ever since I first talked about “AI for EDA for AI,” I’ve been fascinated by this topic. We have now successfully integrated AI solutions into our tools and methodologies, and we have helped customers develop some really sophisticated chips for AI applications. I can promise you that we’ll have a bunch of interesting stories coming up over the coming months and years. Please stay tuned!