Does UVM sometimes make you feel stupid?

Somewhere in the deep trenches of a UVM-based verification project, an engineer teeters on the verge of insanity.

As the saying goes, the faint of heart need not attempt UVM-based verification. But what makes it so challenging to learn and adopt UVM in real life?

First off, it is assumed that anybody who attempts to use UVM has a firm grasp of the verification-specific features of SystemVerilog. It is a big leap for anyone to get their head around the concepts of OOP: classes, extensions, virtual classes, virtual methods, and polymorphism. Add constraints, coverage, cover-groups and cover-points, randomization, scoreboards, interfaces, and virtual interfaces to the mix, and there is a lot to absorb before one can even begin to delve into the basics of UVM.

And then there is the UVM base class library itself. To the uninitiated, it is a large library to understand.

There are about 150 SV code files, 460 classes, 40 enums, 440 macros, and 1650 unique functions! Of course, we can’t forget Transaction Level Modelling (TLM) and Phases, can we? Very quickly, we realize that understanding the interactions of these classes and methods and solving the complex verification challenges is a formidable task.

But have no fear, you say; after all, we are talking about the Universal Verification Methodology. Universal, as in all EDA tool vendors comply strictly with the same laws, by-laws, and guidelines. In practice, however, we discover that there are various sub-methodologies, or idioms, for setting things up and accomplishing common tasks. Different EDA companies on the committee back these sub-methodologies, each of which has its own history within the UVM committee. One sub-methodology relies heavily on macros while another insists on using method calls. One sub-methodology relies on creating virtual sequencers, and the other avoids the term altogether.

So, where do you start and how do you avoid creating a hodge-podge of idioms and spaghetti code?

An immediately obvious solution is automation. A moderately complex script could generate the frequently used UVM testbench structures based on the RTL design being tested. Examples of such structures include interfaces, agents, drivers, and monitors for standard buses, as well as standard sequences. Then there is the test plan, which forms the basis for measuring the success and completeness of your verification efforts. For one-time use, a home-grown script would arguably suffice.
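As a rough illustration of what such a home-grown generator might look like, here is a minimal Python sketch that renders an interface and a driver skeleton from a bus name and a signal list. Everything in it is an assumption made for the example: the function names (`render_interface`, `render_driver`, `generate_agent_files`), the `<name>_if` / `<name>_item` / `<name>_driver` naming scheme, and the template contents. A real generator would also cover monitors, agents, sequences, and the test plan.

```python
from string import Template

# Hypothetical template for a bare-bones UVM driver. The sequence item type
# (<name>_item) and the interface (<name>_if) are assumed to exist elsewhere.
DRIVER_TEMPLATE = Template("""\
class ${name}_driver extends uvm_driver #(${name}_item);
  `uvm_component_utils(${name}_driver)

  virtual ${name}_if vif;

  function new(string name, uvm_component parent);
    super.new(name, parent);
  endfunction

  task run_phase(uvm_phase phase);
    forever begin
      seq_item_port.get_next_item(req);
      // TODO: drive req onto vif
      seq_item_port.item_done();
    end
  endtask
endclass
""")

# Hypothetical template for the matching SystemVerilog interface.
INTERFACE_TEMPLATE = Template("""\
interface ${name}_if (input logic clk);
${signals}
endinterface
""")


def render_interface(name, signals):
    """Render an interface from a list of (signal_name, width) tuples."""
    lines = []
    for sig, width in signals:
        # Single-bit signals get no range; wider ones get [width-1:0].
        rng = f"[{width - 1}:0] " if width > 1 else ""
        lines.append(f"  logic {rng}{sig};")
    return INTERFACE_TEMPLATE.substitute(name=name, signals="\n".join(lines))


def render_driver(name):
    """Render the driver skeleton for the given bus name."""
    return DRIVER_TEMPLATE.substitute(name=name)


def generate_agent_files(name, signals):
    """Return a dict mapping output file names to generated source text."""
    return {
        f"{name}_if.sv": render_interface(name, signals),
        f"{name}_driver.sv": render_driver(name),
    }
```

Calling `generate_agent_files("apb", [("psel", 1), ("paddr", 32)])` returns the text of two files, `apb_if.sv` and `apb_driver.sv`, which a wrapper script could then write to disk. Even at this size, the templates hard-code one particular UVM idiom (utility macros plus `get_next_item`/`item_done`), which hints at how much such a script has to grow once more than one project relies on it.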

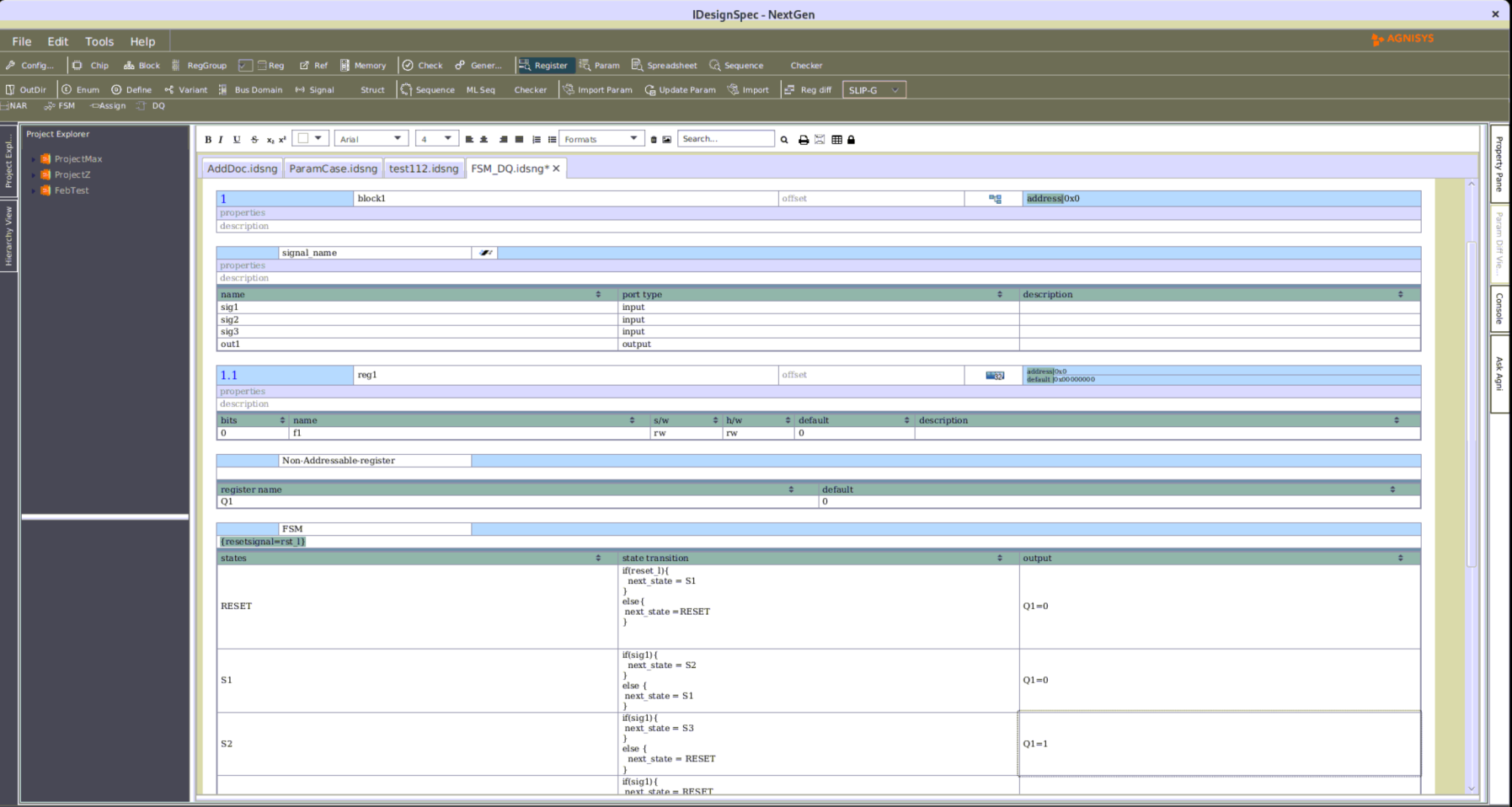

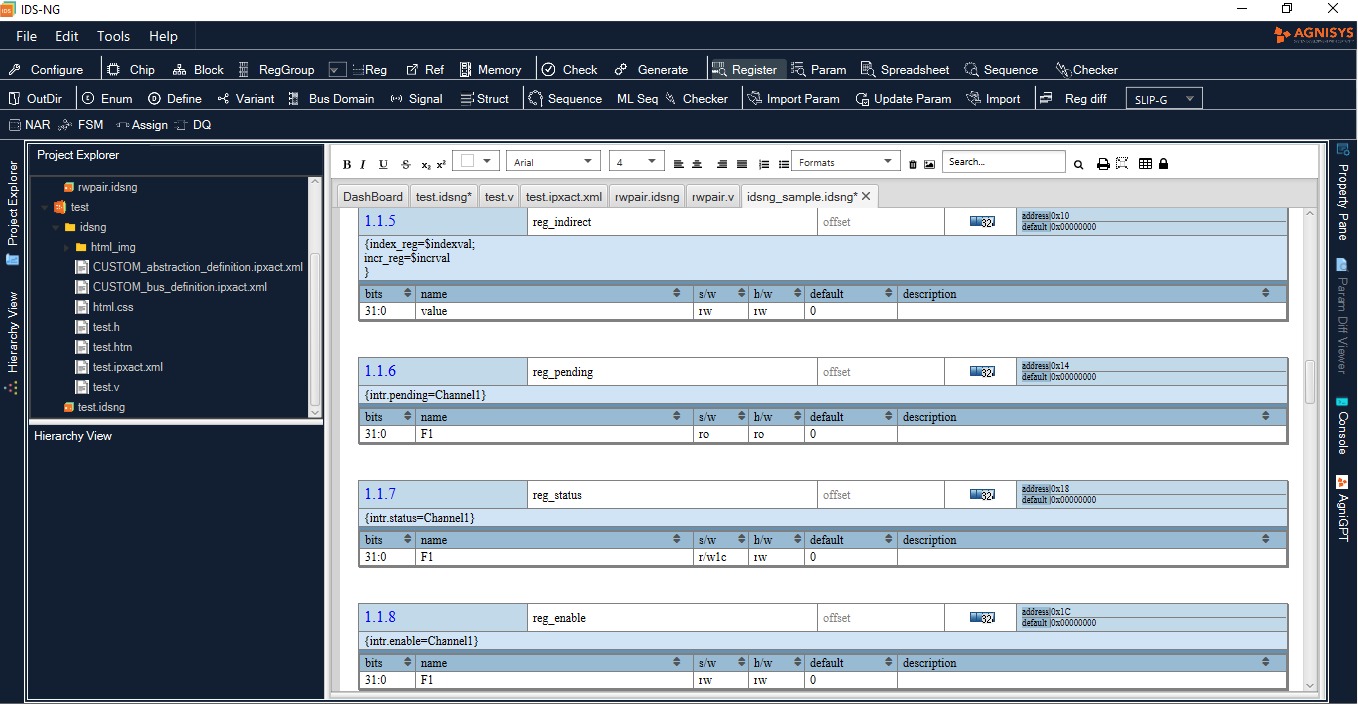

What is not so obvious is that, as specifications change and requirements grow, enhancing and maintaining such a script becomes an arduous task. "Ownership" of such a home-grown tool might also become an issue as people get reassigned to different projects. Investing in an industry-standard tool, like IDesignSpec with the ARV-Sim module, then becomes a smart choice.

If the specification is in a standard, executable format, it significantly aids automation. Again, IDesignSpec comes to the fore: it allows you to create a single register specification in a format such as SystemRDL, Word, Excel, or IP-XACT. From that specification, you can use the ARV-Sim capabilities to generate all UVM testbench-level components, test plans, and scripts (Makefiles). Generating the UVM testbench automatically need not be an end in itself; you can use it as a springboard to add components like third-party VIPs, checkers, and monitors.

And just like that, your initial trepidations about using UVM in your verification environment, whether real or imagined, vanish into thin air. When you compare the time and effort of creating, debugging, and bringing up a UVM test environment with a well-designed, robust, industry-proven tool against doing it all manually, you suddenly feel smart, an entirely different feeling from where you started!